iNaturalist Integration


Recently I’ve become more interested in the flora and fauna I see while out bushwalking, and the reason for that is iNaturalist.

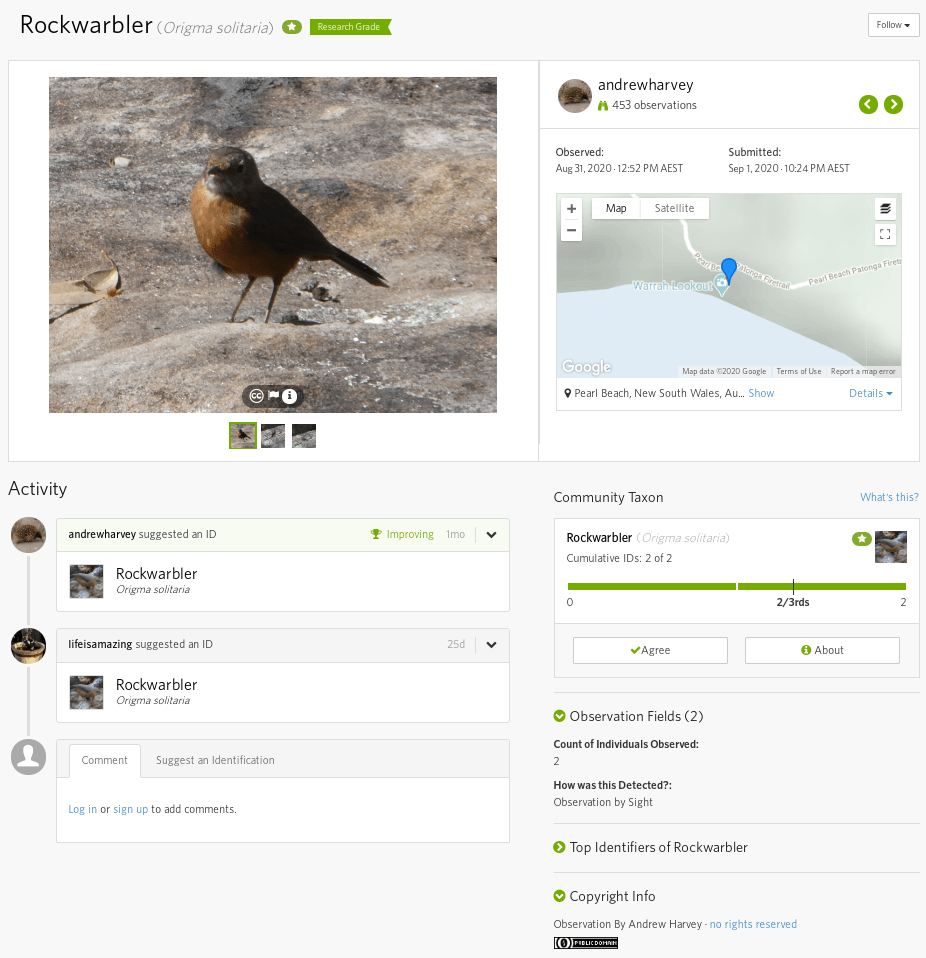

iNaturalist is a social media platform where anyone can submit their wildlife sightings, with a focus on identifying the species observed. Anyone can submit an identification (known on the site as an ID) of what they think the species in your observation is, and in turn you can help identify others’ observations.

As someone with no clue about natural history (I only learnt what “natural history” means because iNaturalist got me interested in the field), I find the platform attractive because it is easy to use and open to anyone, including those without any domain knowledge. Ultimately, though, what makes it work is the community behind it. When I first uploaded some observations, I was blown away to have others add identifications within minutes.

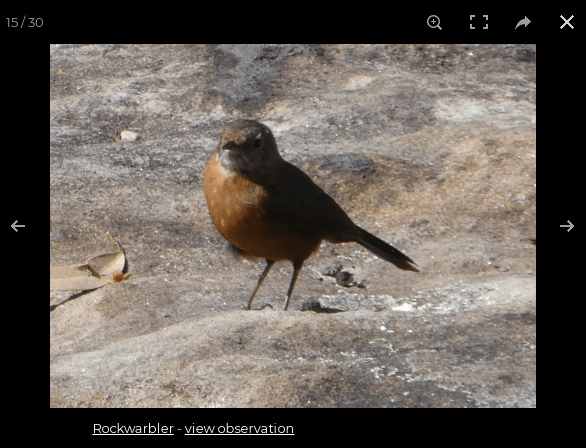

We have grand dreams of showing the kinds of wildlife you might encounter on each walk. As a first step, where a walk photo is of wildlife and has been uploaded to iNaturalist, we now show the species (or higher-level classification) determined by iNaturalist alongside the photo.

The way BeyondTracks was built, all photos are ingested from Flickr, so for each photo we know the Flickr ID. iNaturalist has an option to import a photo from Flickr, and doing so links the observation to that Flickr ID.

iNaturalist has two APIs. The newer one at https://api.inaturalist.org/v1/docs/ even comes with a Node library, which I tried first, but unfortunately it doesn’t return the photo source and Flickr ID (https://github.com/inaturalist/iNaturalistAPI/issues/188).

However, the older API at https://www.inaturalist.org/pages/api+reference does return the photo source information, so I scrapped the Node library and instead retrieved the details directly:

```javascript
import got from 'got'

// inatUsers is assumed to be an array of iNaturalist usernames defined elsewhere
const obs = []
for (const username of inatUsers) {
  let pageObs = []
  let page = 1
  // Keep requesting pages until one comes back empty
  while (page === 1 || pageObs.length) {
    process.stdout.write(`Request observations for ${username} page ${page}...`)
    const res = await got(`http://inaturalist.org/observations/${username}.json?page=${page}&per_page=200`)
    pageObs = JSON.parse(res.body)
    process.stdout.write(` ${pageObs.length}\n`)
    obs.push(...pageObs)
    page++
  }
}
```
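Once the observations are collected, they need to be matched back to the walk photos by Flickr ID. The following is only a rough sketch of how that lookup might be built, not the actual BeyondTracks code. It assumes each observation carries a `photos` array whose entries have a `type` of `'FlickrPhoto'` and a `native_photo_id` holding the Flickr ID. Those field names, the `species_guess` field, and the `indexByFlickrId` helper are assumptions for illustration and should be checked against a real response.

```javascript
// Sketch only: `photos`, `type`, `native_photo_id` and `species_guess` are
// assumed field names, and indexByFlickrId is a hypothetical helper.
function indexByFlickrId(observations) {
  const byFlickrId = new Map()
  for (const ob of observations) {
    for (const photo of ob.photos || []) {
      // Skip photos that were not imported from Flickr
      if (photo.type !== 'FlickrPhoto' || !photo.native_photo_id) continue
      // Normalise the ID to a string so lookups are consistent
      byFlickrId.set(String(photo.native_photo_id), ob)
    }
  }
  return byFlickrId
}

// Made-up sample data shaped like the assumed response
const sample = [
  { id: 1, species_guess: 'Laughing Kookaburra', photos: [{ type: 'FlickrPhoto', native_photo_id: '123' }] },
  { id: 2, species_guess: 'Eastern Grey Kangaroo', photos: [{ type: 'LocalPhoto', native_photo_id: '9' }] }
]
const lookup = indexByFlickrId(sample)
console.log(lookup.get('123').species_guess)
console.log(lookup.has('9'))
```

With a map like this, each ingested Flickr photo can be checked against iNaturalist in a single lookup rather than by scanning every observation.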